We have recently released a few products with optical flow sensors (the Flow deck and the Flow Breakout board) without really talking about the concept of optical flow. So we thought we would dedicate this week's post to it.

The most common example of optical flow is probably a computer mouse. Turn the mouse over and you'll see a bright light that illuminates the surface so that a camera can see it clearly. While running, the camera identifies features in the surface below it and tracks their motion between frames. As you move the mouse to the left, the features in the image move to the right.

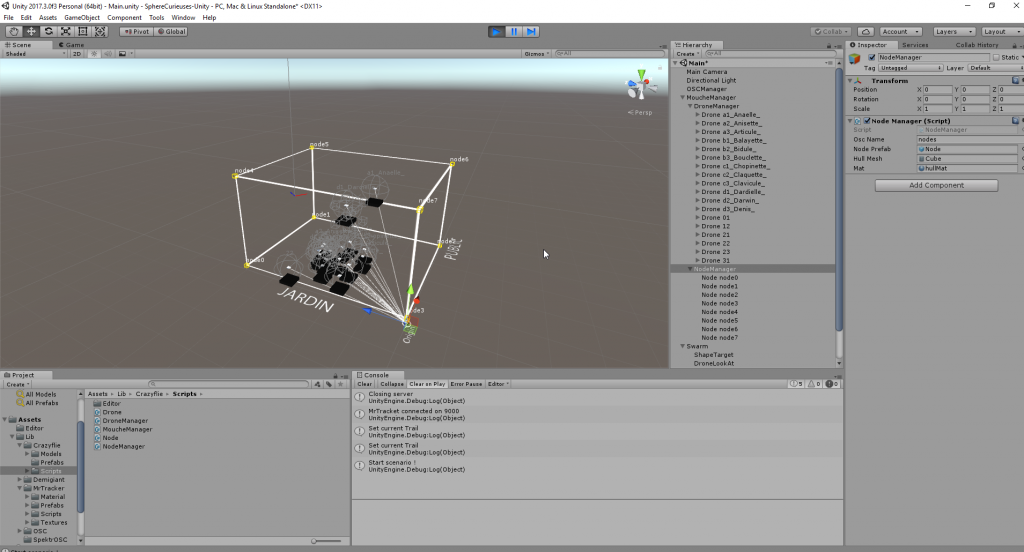

In the example below you can see a feature being tracked over time.

The feature is tracked from frame to frame and the output is the distance that the feature moved since the previous frame.
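To make the frame-to-frame tracking a bit more concrete, here is a minimal block-matching sketch in Python. It is a generic textbook technique, not the algorithm used inside the PixArt chips (which isn't described here). Frames are assumed to be small lists of 8-bit brightness values, and the names `estimate_shift`, `make_texture` and `shift_frame` are hypothetical. The sketch tries every small shift, keeps the one with the lowest mean absolute difference over the overlapping pixels, and returns it as the distance the features moved since the previous frame. The mouse itself moved the opposite way.

```python
import random


def estimate_shift(prev, curr, max_shift=3):
    """Find the (dx, dy) that best explains curr as a shifted copy of prev.

    Exhaustive block matching: for every candidate shift, compare the
    overlapping pixels using the mean absolute difference and keep the best.
    A positive dx means the surface features moved right in the image.
    """
    h, w = len(prev), len(prev[0])
    best_score, best_shift = float("inf"), (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total, count = 0, 0
            for y in range(h):
                for x in range(w):
                    py, px = y - dy, x - dx  # where this pixel was in prev
                    if 0 <= py < h and 0 <= px < w:
                        total += abs(curr[y][x] - prev[py][px])
                        count += 1
            score = total / count
            if score < best_score:
                best_score, best_shift = score, (dx, dy)
    return best_shift


def make_texture(w, h, seed):
    """Hypothetical textured 'surface' with random 8-bit brightness values."""
    rng = random.Random(seed)
    return [[rng.randrange(256) for _ in range(w)] for _ in range(h)]


def shift_frame(frame, dx, dy, seed):
    """Shift a frame by (dx, dy); pixels entering the view get new texture."""
    h, w = len(frame), len(frame[0])
    fresh = make_texture(w, h, seed)
    return [[frame[y - dy][x - dx] if 0 <= y - dy < h and 0 <= x - dx < w
             else fresh[y][x] for x in range(w)] for y in range(h)]


prev = make_texture(12, 12, seed=1)
curr = shift_frame(prev, dx=-2, dy=0, seed=2)  # features moved 2 px left
dx, dy = estimate_shift(prev, curr)
print("feature shift:", (dx, dy))   # the features moved left...
print("mouse motion:", (-dx, -dy))  # ...so the mouse moved right
```

Exhaustive search is the simplest way to show the idea; it only works because the shift between consecutive frames is assumed to be small (here at most 3 pixels).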

The functionality of an optical flow sensor of course depends on being able to find features to track. A very uniform surface will be hard to track, since all the frames will look the same. If you've ever tried using a mouse on a glass table or another reflective surface, you've probably noticed that it doesn't work.
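A small Python sketch shows why a uniform surface is a problem for this kind of matching. It scores every candidate shift between two frames with the mean absolute difference and counts how many shifts fit equally well. The `candidate_count` measure is a hypothetical illustration, not something the sensor reports. On a textured frame exactly one shift fits; on a uniform frame every shift fits, so the motion can't be determined.

```python
import random


def match_scores(prev, curr, max_shift=2):
    """Mean absolute difference for every candidate shift (dx, dy)."""
    h, w = len(prev), len(prev[0])
    scores = {}
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            diffs = [abs(curr[y][x] - prev[y - dy][x - dx])
                     for y in range(h) for x in range(w)
                     if 0 <= y - dy < h and 0 <= x - dx < w]
            scores[(dx, dy)] = sum(diffs) / len(diffs)
    return scores


def candidate_count(prev, curr, tolerance=1e-9):
    """How many shifts explain the frames equally well (1 = unambiguous)."""
    scores = match_scores(prev, curr)
    best = min(scores.values())
    return sum(1 for s in scores.values() if s - best <= tolerance)


rng = random.Random(0)
textured = [[rng.randrange(256) for _ in range(10)] for _ in range(10)]
uniform = [[128] * 10 for _ in range(10)]  # e.g. a plain, evenly lit floor

print(candidate_count(textured, textured))  # 1: only the zero shift fits
print(candidate_count(uniform, uniform))    # 25: every shift fits equally well
```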

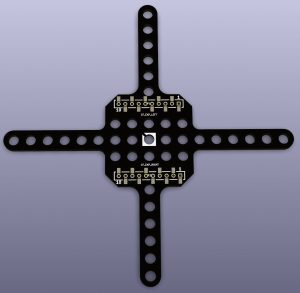

The same concept is used in our Flow products. It also happens that PixArt, the manufacturer of the chip we use, is a world leader in optical mouse sensors. They have applied the same concepts as in the mouse, but with a different lens that lets the camera track features further away (from 80 mm to infinity). As with the mouse, this depends on finding features to track, which can be a problem on poorly lit surfaces or on surfaces with a very uniform color. On the other hand, if the area is lit too strongly from the ceiling above you when you fly, you might start tracking your own shadow on the floor.

One of the issues with using optical tracking on a flying platform is that you need to know the distance to the features. With a mouse you know that the features are right under it, but on a flying platform you can't tell the distance just by looking at the image. Think about sitting in a plane and watching the ground: it seems to move really slowly, even though your speed over the ground is actually very high. For our Flow products we've added the VL53L0x ToF (time-of-flight) distance sensor to measure the distance to the surface that's being tracked. This completes the equation, so that the distance to the tracked features is taken into account. Note that the tracking accuracy decreases as the distance increases, since the difference between frames becomes smaller and harder to detect.
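The geometry behind this can be sketched in a few lines of Python. Assuming the sensor looks straight down, the platform isn't rotating and the angles are small, each pixel sees a fixed angle, so image motion in pixels per frame is really an angular rate; multiplying it by the measured distance gives the speed over the surface. The field of view, pixel count and frame rate below are illustrative assumptions, not datasheet values, and the function names are hypothetical.

```python
import math

# Illustrative numbers only; these are assumptions, not datasheet values.
FOV_RAD = math.radians(42.0)    # field of view across the image
PIXELS = 35                     # pixels across the image
FRAME_DT = 0.01                 # seconds between frames (100 Hz)
PIXEL_ANGLE = FOV_RAD / PIXELS  # angle seen by a single pixel, in radians


def speed_from_flow(pixels_per_frame, distance_m):
    """Speed over the surface (m/s) from measured image motion.

    Assumes the sensor looks straight down, the platform is not rotating,
    and angles are small, so speed = angular rate * distance.
    """
    angular_rate = pixels_per_frame * PIXEL_ANGLE / FRAME_DT  # rad/s
    return angular_rate * distance_m


def flow_from_speed(speed_mps, distance_m):
    """Inverse: image motion (pixels/frame) produced by a given speed."""
    return speed_mps * FRAME_DT / (distance_m * PIXEL_ANGLE)


def metres_per_pixel(distance_m):
    """Ground distance covered by one pixel of flow at this distance."""
    return distance_m * PIXEL_ANGLE


# A drone at 1 m/s, 0.5 m above the floor, vs. a plane at 250 m/s at 10 km.
print(f"drone: {flow_from_speed(1.0, 0.5):.3f} px/frame")
print(f"plane: {flow_from_speed(250.0, 10_000):.4f} px/frame")

# The same flow reading only becomes a speed once the distance is known.
print(f"{speed_from_flow(1.0, 0.5):.2f} m/s at 0.5 m")
print(f"{speed_from_flow(1.0, 2.0):.2f} m/s at 2.0 m")
```

With these numbers the plane moves 250 times faster than the drone, yet its image moves far more slowly. `metres_per_pixel` also hints at why accuracy drops with distance: one pixel of image motion corresponds to more ground the further away the surface is.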

An optical flow sensor can also be used to track the motion of other objects. Suppose the sensor is fixed and pointing sideways: it will then detect objects passing in front of it, which could be used, for instance, to count people passing through a doorway, or as a touchless mouse.
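As a rough illustration of the doorway idea, here is a hypothetical Python sketch. It assumes a stream of per-frame horizontal flow readings from a fixed, sideways-looking sensor, and it treats a run of strong flow lasting a few frames as one passing object, with the sign of the total flow giving the direction. The `count_passes` function, its thresholds and the sign convention are all assumptions made for the example.

```python
def count_passes(flow_x, threshold=2, min_frames=3):
    """Count objects passing a fixed, sideways-looking flow sensor.

    flow_x: per-frame horizontal flow readings (hypothetical units).
    A pass is a run of at least min_frames frames with |flow| >= threshold;
    its direction is taken from the sign of the total flow in that run.
    Returns (left_to_right, right_to_left).
    """
    left_to_right = right_to_left = 0
    run = []
    for value in list(flow_x) + [0]:  # trailing 0 closes a final run
        if abs(value) >= threshold:
            run.append(value)
            continue
        if len(run) >= min_frames:
            if sum(run) > 0:
                left_to_right += 1
            elif sum(run) < 0:
                right_to_left += 1
        run = []
    return left_to_right, right_to_left


readings = [0, 1, 5, 6, 5, 0, 0, -4, -6, -5, -4, 0, 3, 0]
print(count_passes(readings))  # (1, 1): one each way; the lone 3 is ignored
```

Requiring a minimum run length is a simple way to ignore single-frame noise; a real installation would need tuning against actual sensor data.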